
Data Acquisition for the 21st Century

Like a butterfly emerging from a caterpillar, DAQ technology is ready to take off in new directions

The following is a manuscript for an article published in R&D magazine. R&D magazine holds the copyright for the finished article.

C.G. Masi, Contributing Editor

The start of the 21st Century finds data acquisition technology in an odd position. Over the past twenty-five years or so, DAQ technology has grown from the lunatic fringe of the test and measurement world to become the core architecture for measurement systems. Just about every piece of equipment more complicated than a ball-point pen now incorporates an embedded DAQ system. In the process-control world, systems have nearly all gone to digital-computer-based automation with clearly identifiable DAQ systems providing the feedback needed to keep the systems on track. In the R&D world, DAQ technology for collecting physical-measurement data has become an absolute necessity. Scientists and engineers might consider substituting design calculations for building physical prototypes, but manually collecting data for serious research would be unthinkable.

While data-acquisition technology has become ubiquitous in all forms of instrumentation systems, it has managed to do so without quite becoming respectable. The interactive display used to monitor the operation of an automated chemical processing plant, for example, isn't a "Graphical User Interface." That's a DAQ term! Instead, the process-control folks call it a "Human-Machine Interface."

The problem, of course, is simply one of perception. Many folks still have the mental image that data acquisition is just "those little plug-in cards." In fact, what has been happening is that DAQ-system developers--that includes system integrators as well as hardware and software vendors--have been pushing the envelope to develop advanced instrument-system technologies.
If you look at what the DAQ community is working with now, you will see the technology everyone else will be using some years hence. The best way, therefore, to peer into the future of measurement-system technology is to ask what DAQ developers are developing.

R&D assembled a panel of experts from six companies (Agilent Technologies, Capital Equipment Corporation, Data Translation, IOtech, Keithley Instruments, and National Instruments) that are leaders in developing instrument systems based on DAQ technology, and asked them what the future will bring. Here is what they told us:

Where are we now?

"In general," says Dr. Michael Kraft, [[[NEED TITLE]]] at Agilent Technologies, Waldbronn, Germany, "instrument control can be implemented at various levels of complexity." As Fig. 1 shows, the level of control affects access to various instrument parameters. It can also influence the level of metadata collected and allow the system to comply with regulations, such as the U.S. Food and Drug Administration (FDA) standard for making electronic records "trustworthy and reliable." In addition, the more advanced levels of instrument control provide diagnostics and feedback for better instrument maintenance.

"Because data acquisition technology is closely coupled to PC technology, the state of data acquisition art typically trails PC art somewhat," says Joseph P. Keithley, who is simultaneously Chairman of the Board, President and Chief Executive Officer of Keithley Instruments in Cleveland, Ohio. Keithley finds that a major challenge for data acquisition designers is to accommodate the rapid changes that are so much a part of the PC industry. While these changes have opened up opportunities, such as web-based measurements, data acquisition cards and systems must undergo significant design changes to take advantage of the new technology.

"Many data acquisition and test system users resist this change," he observes, "particularly when they have a significant investment in a particular type of hardware or software." For example, given the length of time that PCI-bus computers have been available, ISA products ought to be nearly extinct. But many customers continue to invest in industrial computers with ISA slots so they can run legacy software and associated hardware that has been thoroughly debugged. They know what works well and don't want to invest additional time in getting a new system design to operate properly.

"On the other hand," Keithley continues, "newbies (those unburdened by legacy system issues) are more likely to adopt the latest technologies and design their data acquisition or test systems around them. From a market segmentation perspective, we will continue to see a spectrum of users that range from early adopters of the latest technologies to those sticking with technologies that are two or three generations behind, all of which involve the various form factors."

Sid Mayer, President of Capital Equipment Corporation (CEC), Billerica, Mass., notes that DAQ hardware and analog-to-digital converter technologies are fairly mature. He feels that improvements will come mostly as a continuation of price/performance trends and interface bus and cable options.

"This is still evolving with higher speed, higher resolution, and low power components," observes Tim Ludy, Product Marketing Manager at Data Translation, Marlboro, Mass. "A good example is Data Translation's newest USB module that has four 24-bit ADCs that run independently up to just under 1,000 S/sec."

"Presently," says Tim Dehne, Vice President of Engineering at National Instruments in Austin, Tex., "users are selecting vendors based on the solution that will cause the least amount of problems for them. Flexible software, easy integration, and connectivity between the sensors and hardware will remain key factors for data acquisition customers when selecting components for their DAQ system."

"Software and system integration, however, still have lots of room for improving ease of use," Mayer points out.

Ron Chapek, [[[NEED TITLE]]], IOtech, Cleveland, Ohio, summarizes the current market trends as follows:

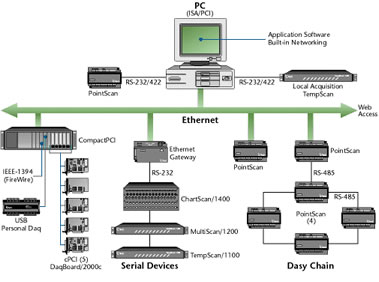

"Many, if not most, users are looking for easier and faster implementation of their data acquisition systems," Keithley observes. "To a great extent, plug-in cards are only building blocks of a do-it-yourself systems. The builder also needs to buy and integrate separate signal conditioners, a variety of low-level device drivers, and other components. In today's business environment, many users don't have the time or inclination to do this. "They don't want to be data acquisition system designers. They want to get on with their measurements and get their jobs done faster. They need products that simplify the process of applying data acquisition systems in difficult and demanding test environments, such as those found on production and process lines. The influence of these largely unmet demands on data acquisition product development is growing, and probably will become dominant in the near future." DAQ in the near future For the next year or so, continued cost reduction, moves toward open software standards, further development of Ethernet/web-enabled instrumentation, and wireless data links will be the big technological trends, according to Chapek. Others agree. Software will certainly be a major driving force. "Customers treat DAQ hardware as somewhat of a commodity item," Mayer points out, "with many vendors close in hardware features and price. The rest of the ease-of-use equation becomes a deciding factor." Ludy says that the need is for application software that is easier to use with less programming effort needed to get the users' applications running and getting their product to market sooner. "I see this as a year of return to pragmatism and less technology hype," Dehne says. "Software's quality and usability will be paramount in a user's decision on which hardware vendor is selected. Users will gravitate to the vendor that gives them the fewest problems with completing their DAQ applications." 
Mayer sees a need for further improvement in system integration and multi-vendor support as well: "Few applications of any size can be completed with only a single source solution, and users continue to find it a challenge to master multiple sets of documentation and programming standards." "PCs are closing up," Ludy adds, "and users will need to replace current solutions with DAQ products that can plug in tomorrow's personal computers." "In the next year, National Instruments expects that the last of the ISA users will finally transition to the PCI bus, thus taking advantage of its higher-bandwidth and plug-and-play capability for easier installation and use," says Dehne. He also predicts that users will embrace the easy connectivity offered through modern DAQ devices. Users will begin realizing the true benefits provided by internet-based measurements. That technology will be used commonly by engineers and scientists rather than primarily by early adopters. High-performance, portable solutions based on CardBUS or IEEE 1394 will deliver PCI-like performance for laptops. The market will also expand into the higher-speed realm. Simultaneous sampling will be a requirement of future DAQ system architectures that will operate at sampling rates beyond 1 MS/sec. Developers integrating larger systems that involve different measurement devices will require very precise synchronization. They will look to the backplane, triggering, and performance capabilities of PXI/CompactPCI platform to meet their needs. CEC's Mayer agrees that alternatives to plug-in cards are important to DAQ's future: "The newer packaging and form factors, such as USB, perhaps FireWire, and definitely Ethernet, are becoming price competitive enough to be used in many places where plug-in boards might have been used before." Chapek expects eventually to see ONE architecture, such as that shown in Fig. 2, for pilot plants, test floors, R&D labs and production applications. 
"This split has been artificial," he says, "and the walls are coming down!"

For example, Rockwell/A-B owns 50% of the large programmable logic controller (PLC) market in North America. However, the days when they could charge a 35% premium and supply the entire solution, including sensors, communication protocol, PLC, I/O peripherals, HMI, etc., as part of a closed architecture system are for the most part over. They must now provide "best-of-breed" components for each function. Open standards for communication protocols have opened the door for such multi-vendor PC-based solutions.

The DAQ industry, according to Chapek, needs to move in the same direction as the industrial-automation market. That is, DAQ vendors must embrace open architectures, leverage commercial standards, adopt software standards, offer Ethernet connectivity, and provide multi-vendor solutions.

While Chapek stated this concept clearly, it resonates with comments made by the other experts. All seem to agree that DAQ's future includes a generous dose of Web technology.

"Embedded Web technology," says Mayer, "has allowed the creation of a new type of DAQ 'appliance' that is truly plug-and-play without software installation (for example, CEC's web-DAQ/100)." Not only is no software required for basic operation and download of data, but such products are inherently platform-independent, being equally at home with Macintosh or Linux systems as well as Windows. Off-the-shelf network technology makes it easy to route and connect devices, and even create private wide-area networks with security features.

Keithley generally agrees with all these comments, but cautions that customer demand is likely to have the greatest influence on system features and functions, as well as the technology used to supply them. "I believe that more manufacturers will begin to realize that adopting the latest technology may or may not help them get their new products to market any faster," he says.
"The key will be whether or not the technology helps quickly create useful data collection systems. Until recently, the main market driver has been manufacturers pushing a technology, as opposed to customers pulling. This situation is beginning to be reversed."

Three Year Outlook

When we start looking a little bit farther out, technologies and factors that we only hear about now should start becoming important drivers of DAQ technology. These include:

A major change that will affect DAQ-system design is the arrival of slotless PCs. Keithley points out that manufacturers of plug-in boards will have to react to that change by exploiting other form factors, such as external chassis systems, if they wish to continue supplying board-level products.

"The key feature in successful DAQ products," Mayer predicts, "will be integration of standards for getting information from the sensor front-end and associated electronics up to the point of consumption." Simple plug-in boards with proprietary programming interfaces will still have market momentum from existing applications, but new applications will demand a higher level of software capability.

"Products that rival, and in many cases exceed, the performance of today's stand-alone products will dominate the DAQ solution landscape," says Dehne. He and Ludy both feel that the trend towards higher dynamic range products will continue, pushing 14- and 16-bit products to higher and higher speeds, providing more memory in the data path, and integrating additional signal processing power into high-speed silicon.

"With the increasing power of FPGAs (field programmable gate arrays)," Dehne says, "we will see complex triggering, user-configurable processing, and even reconfigurable hardware make their way into data acquisition products of the future. Sophisticated synchronization technologies will also be available, so users can create larger, more sophisticated DAQ systems. Finally, flexible-resolution products that leverage advanced signal processing technology will emerge so users can programmatically trade speed for accuracy."

Dehne also expects DAQ systems to become more distributed. Networking technologies, in particular wireless technologies, have the potential to simplify the installation and maintenance of data acquisition systems, making them accessible across a company's enterprise. Others agree that web-enabled instrumentation will become more common.
Kraft, in fact, predicts that DAQ systems will become fully web-enabled over the next three years. Bandwidth will expand from 10-100 Mb/sec to even higher rates via optical devices. Expansion of web-enabled instrumentation will force the DAQ community to develop means to provide privacy and security to instrument communication. Kraft believes these security systems will start moving out of the application (browser) software and into the communication protocol.

"Smart sensors are also an interesting area with a potentially dramatic impact on DAQ systems," says Dehne. "Currently, no global standard exists for communicating to smart sensors." Until a universally accepted standard becomes reality, the industry will remain fragmented and the impact of smart sensors on traditional data acquisition will be limited.

Another technology needed to make smart sensors an important part of the DAQ landscape is MEMS (microelectromechanical systems) fabrication. Using MEMS architecture, designers can pack more functionality into a smaller real estate area. MEMS technology can also be applied to other parts of the data acquisition process, providing higher single-board densities and more on-board isolation solutions.

Kraft believes that analytical-instrument vendors will have to start making shared software and communication components available to support open systems. "Linking vendor-independent analytical instruments together without individual engineering efforts" will be a major unmet challenge, he says.

Mayer believes that it will be easier to combine and program a variety of devices in an application, but real plug-and-play in a multivendor sense will still be developing. As distributed and interconnected DAQ systems become more common, a need will arise for new ways to synchronize and coordinate these devices.

Looking into the Distance

Kraft makes a number of individual predictions for what the DAQ landscape will look like five years from now:

"While it is hard to predict 5 years away exactly what products will have as built-in features," says Mayer, "it is guaranteed that user demands will always outstrip product features in the area of software tools." In addition, there will still be a need for better hardware solutions at the most basic level of interconnecting sensors, signal conditioners, and DAQ devices. Each vendor has some piece of the answer, but Mayer predicts that users will still be wrestling with screw terminal pinouts, soldering point-to-point wiring and creating special adapters. "There is a great opportunity here for the right set of interconnect products," he says, "but the multiplicity of vendors and types of hardware required make this a daunting challenge."

Ease of customization and programming will have made significant strides by then, shifting the emphasis toward letting end users manipulate and report results themselves. Programmers' jobs will have become somewhat easier, but the ever-changing demand for new ways of viewing and crunching numbers will by no means have disappeared, and the more of this ability that can be put in non-programmers' hands, the more successful a product will be.

The market demand for easy reporting and display will begin to be met by the convergence of DAQ and data-mining technologies. As DAQ devices use standards based on the Web, such as extensible markup language (XML) or its successors, tools that come from the business data-mining and visualization side will be applicable directly to DAQ results by the consumers of the information.

Keithley predicts that less demanding measurements (8- to 12-bit resolution) will increasingly become commoditized. "If the past is any indication," he continues, "we can expect the manufacture of such systems to move offshore. To stay in business, domestic manufacturers will have to upgrade the value and capabilities of their systems."

Ludy points to additional pressure that will come to bear on DAQ manufacturers. "Products are required to pass very stringent tests to pass FCC and CE compliance," he says, "and these are getting more difficult to pass. For example, to pass CE tests, our products need to be able to withstand a 4 to 8 kV transient discharge directly to the I/O pins--and keep running!

"Today, there are many smaller companies supplying cheaper non-compliant products. With so many components getting harder to acquire and manufacturing lead times getting longer than some people's careers, companies cannot afford to risk dealing with these smaller companies."

Chapek says that companies are looking to buy from long-term partners that reduce their short-, medium- and long-term investments. They want risk and cost reduction. Staffs are thin and they cannot afford to devote limited resources to protracted integration efforts or unreliable hardware. They need to work with companies that provide offerings that are easy to integrate, but that can also be quickly and easily adapted to meet changing application requirements. Only an open system architecture that can accommodate third party software and hardware can meet these needs.

Clearly, Kraft points out, only those vendors of analytical instrumentation who are able to supply components, products or solutions that can be linked together, and where the whole system provides more value than the sum of all pieces, will do well.

The result, Mayer concludes, is that there will be some shakeout of conventional DAQ vendors. Those adapting and driving the new software tools and standards will extend their success. Others will fall by the wayside. "Opening up computers and configuring add-ins to do DAQ will have become obsolete," he says. "This doesn't mean every single such product will go away, but such devices will be clearly the 'old way.' Such technology will not be used if at all possible."

Summary

"The most revolutionary changes in DAQ in the coming times will be powered by the explosion of Web-based standards for information interchange," Mayer predicts.

Kraft agrees. "While in the past instruments have been controlled through a local user interface, and data have been collected through A/D converters," he says, "instruments are now controlled through the DAQ System." This allows full documentation of method and sequence parameters and also records any specific events, failures and instrument-specific information, such as serial numbers, for compliance reasons. It also enables a much higher degree of automation to increase sample throughput. TCP/IP communication also enables wireless communication, which allows the analyst to control and monitor the measurement process from any place. Finally, vendor-independent communication protocols and data-file structures will allow users to mix and match instruments and data acquisition systems from different platforms and vendors.

"The infrastructure of the Web is already ubiquitous," Mayer observes, "fueled by conventional business applications." Combined with recent developments in embedded-system technology, it has become possible to cheaply put a complete web server right near the sensors and A/D hardware--anywhere the signals may be. In the past, a wide variety of proprietary busses and serial port approaches have been used to cable devices together, but web technology now makes it trivial to wire and route information across a room, factory, or continent. A wide-area intranet can be created with off-the-shelf components and no need for private long distance wires, even when you want a secure connection.

Web standards go beyond the hardware connection as well. By sourcing DAQ information directly in standard formats such as web pages, e-mail, or FTP file transfers, there is less need to write custom programs to consume and process the data.
Developing standards, such as XML, are paving the way for exchange of meaningful structured data that can be pulled instantly into databases and reports without conventional programming.

While the "little plug-in cards" may be not long for this world, data acquisition in the larger sense of computer-based measurement systems is really just getting started.

Levels of Instrument Control
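
To make the XML point in the summary concrete, here is a minimal Python sketch of how structured DAQ results might be consumed with general-purpose tools. The XML layout (the `acquisition` and `reading` elements and their attributes) and the function names are invented for illustration; they do not correspond to any vendor's format or to anything the panelists described.

```python
import xml.etree.ElementTree as ET
from statistics import mean

# Hypothetical payload: element and attribute names are assumptions made
# for this sketch, not a real vendor or standards-body schema.
SAMPLE_XML = """
<acquisition device="demo-daq" units="V">
  <reading channel="0" t="0.000">1.25</reading>
  <reading channel="0" t="0.001">1.27</reading>
  <reading channel="1" t="0.000">3.30</reading>
  <reading channel="1" t="0.001">3.28</reading>
</acquisition>
"""


def parse_readings(xml_text):
    """Turn each <reading> element into a plain dict (one row per sample)."""
    root = ET.fromstring(xml_text.strip())
    return [
        {
            "channel": int(node.get("channel")),
            "t": float(node.get("t")),
            "value": float(node.text),
        }
        for node in root.iter("reading")
    ]


def mean_by_channel(rows):
    """Group sample values by channel number and average each group."""
    grouped = {}
    for row in rows:
        grouped.setdefault(row["channel"], []).append(row["value"])
    return {channel: mean(values) for channel, values in grouped.items()}


rows = parse_readings(SAMPLE_XML)
print(mean_by_channel(rows))
```

Because the rows come out as ordinary dictionaries, the same data could be handed to a database loader or a reporting tool without any DAQ-specific code, which is the kind of reuse the panelists anticipate.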
